serverless, eventbridge, DynamoDB, pattern, poc


Publishing EventBridge events with DynamoDB Streams

Raise business events into EventBridge listening to changes from your database

- David Boyne

- Published on • 8 min read

In the previous blog post, we explored how to send large payloads through EventBridge using the claim check pattern. Now let's explore how we can use DynamoDB to raise EventBridge events.

When designing event-driven architectures, it’s important to understand the behaviour of your system, identify domains, and raise events that are both technical and important to your business.

When we design event-driven applications, we often find ourselves wanting to add or update items in a database and then send an event afterwards. So we might be tempted to implement something like this in our service:

// insert data into db
await insertIntoDatabase(user)
// INSERT COMPLETE....
// send event (could fail!?)
await sendEvent('UserCreated', user)

Most of the time this pattern may work… but what happens when the insert into the database succeeds and sending the event fails? This could lead to data inconsistencies and bugs in downstream consumers that rely on these events.
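To make the failure window concrete, here is a minimal, self-contained TypeScript sketch of that dual write. `FakeDatabase` and `FakeEventBus` are hypothetical in-memory stand-ins rather than AWS APIs, and the bus is configured to always fail so we can see what state is left behind.

```typescript
// Hypothetical in-memory stand-ins for a database and an event bus (not AWS APIs).
interface User {
  id: string;
  username: string;
}

class FakeDatabase {
  items = new Map<string, User>();
  async insert(user: User): Promise<void> {
    this.items.set(user.id, user);
  }
}

class FakeEventBus {
  published: Array<{ detailType: string; detail: User }> = [];
  shouldFail: boolean;
  constructor(shouldFail: boolean) {
    this.shouldFail = shouldFail;
  }
  async send(detailType: string, detail: User): Promise<void> {
    // Simulate a transient failure such as a network error or throttling
    if (this.shouldFail) throw new Error('event bus unavailable');
    this.published.push({ detailType, detail });
  }
}

// Two separate writes with no shared transaction: the "dual write"
async function createUser(db: FakeDatabase, bus: FakeEventBus, user: User): Promise<void> {
  await db.insert(user);
  await bus.send('UserCreated', user); // if this throws, the insert is not rolled back
}

async function simulateFailedSend(): Promise<{ stored: number; published: number }> {
  const db = new FakeDatabase();
  const bus = new FakeEventBus(true);
  try {
    await createUser(db, bus, { id: '1', username: 'jane' });
  } catch {
    // The caller sees an error, but the user has already been committed
  }
  return { stored: db.items.size, published: bus.published.length };
}

simulateFailedSend().then((r) => console.log(`stored=${r.stored} published=${r.published}`));
// prints "stored=1 published=0"
```

Even though the caller receives an error, the user has already been committed and no `UserCreated` event exists for downstream consumers.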

The service (code) above is responsible for both storing data and raising events, but what if we could simplify this code and reduce its dependencies? What if we removed the call to raise the event and instead relied on our architecture to do this?

With Amazon DynamoDB, we can listen for changes to our data (change data capture, or CDC) and raise events for downstream consumers. This is a powerful pattern: it lets us react in near-real time to changes within our database and gives us the option to raise EventBridge events from them.

In this blog post we will explore DynamoDB streams with Amazon EventBridge and understand patterns we can use to simplify our event-driven architectures.

Grab a drink, let’s dive in.

Why use change data capture events with DynamoDB?

DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale. There are no servers to provision, patch, or manage and no software to install, maintain, or operate, which makes it a great solution for storing data in our event-driven applications.

One awesome feature of DynamoDB is the ability to listen to changes and react to them downstream. For example, when a new customer is added to the database, you could use the change event to invoke a service that sends that customer an email, all decoupled and asynchronous.

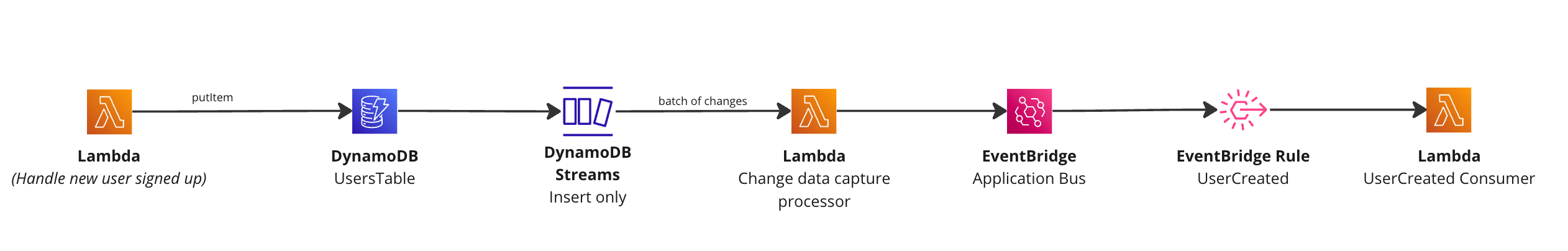

Example of DynamoDB Streams and event source to Lambda function

DynamoDB Streams offer us a few options when we set up a stream. When changes occur, you can get the updated item, the previous item, both, or just the keys:

- OLD_IMAGE - The entire item, as it appeared before it was modified.
- NEW_IMAGE - The entire item, as it appears after it was modified.
- KEYS_ONLY - Only the key attributes of the modified item.
- NEW_AND_OLD_IMAGES - Both the new and the old images of the item.
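As a rough illustration of what each option means for a single change, the sketch below models which parts of a stream record are populated for each view type. It is a simplification: items are plain objects here (real stream records carry them as DynamoDB JSON), and `buildRecordPayload` is a hypothetical helper, although the `Keys`, `NewImage` and `OldImage` field names match those on real stream records.

```typescript
// Simplified model of what each stream view type puts into a stream record.
// Items are plain objects here; real stream records carry them as DynamoDB JSON.
type StreamViewType = 'KEYS_ONLY' | 'NEW_IMAGE' | 'OLD_IMAGE' | 'NEW_AND_OLD_IMAGES';
type Item = Record<string, unknown>;

interface StreamRecordPayload {
  Keys: Item;
  NewImage?: Item;
  OldImage?: Item;
}

function buildRecordPayload(
  viewType: StreamViewType,
  keyNames: string[],
  oldItem: Item | undefined, // undefined for an INSERT
  newItem: Item | undefined, // undefined for a REMOVE
): StreamRecordPayload {
  const source = newItem ?? oldItem ?? {};
  const Keys: Item = {};
  for (const name of keyNames) Keys[name] = source[name];

  // Keys are always present; images are included only if the view type asks for them
  const payload: StreamRecordPayload = { Keys };
  if ((viewType === 'NEW_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES') && newItem) {
    payload.NewImage = newItem;
  }
  if ((viewType === 'OLD_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES') && oldItem) {
    payload.OldImage = oldItem;
  }
  return payload;
}

// An update to a user's email, seen through two different view types
const before = { id: 'u1', email: 'old@example.com' };
const after = { id: 'u1', email: 'new@example.com' };
console.log(buildRecordPayload('KEYS_ONLY', ['id'], before, after)); // { Keys: { id: 'u1' } }
console.log(buildRecordPayload('NEW_AND_OLD_IMAGES', ['id'], before, after));
```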

DynamoDB Streams allow our event-driven applications to be super flexible in which changes we manage and listen for, and even better, we can raise EventBridge events based on changes to our DynamoDB table in near-real time.
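Whichever view type you choose, the images arrive in DynamoDB's attribute-value format (for example `{ "username": { "S": "jane" } }`) rather than as plain JSON, which is why the processing Lambda later in this post calls `unmarshall` before publishing. The sketch below shows roughly what that conversion does for a subset of attribute types (`S`, `N`, `BOOL`, `NULL`, `M`, `L`). `decodeItem` is a hypothetical helper for illustration; in real code, `unmarshall` from `@aws-sdk/util-dynamodb` is the better choice since it also handles sets and binary values.

```typescript
// Minimal sketch of decoding DynamoDB attribute values, the format of NewImage and
// OldImage in a stream record. Only a subset of attribute types is handled.
type AttributeValue =
  | { S: string }
  | { N: string }
  | { BOOL: boolean }
  | { NULL: true }
  | { M: Record<string, AttributeValue> }
  | { L: AttributeValue[] };

function decodeValue(value: AttributeValue): unknown {
  if ('S' in value) return value.S;
  // Numbers travel as strings; Number() may lose precision for very large values
  if ('N' in value) return Number(value.N);
  if ('BOOL' in value) return value.BOOL;
  if ('NULL' in value) return null;
  if ('M' in value) return decodeItem(value.M);
  return value.L.map(decodeValue);
}

function decodeItem(image: Record<string, AttributeValue>): Record<string, unknown> {
  const result: Record<string, unknown> = {};
  for (const [name, value] of Object.entries(image)) result[name] = decodeValue(value);
  return result;
}

// A NewImage shaped like the user items in this post (values are made up)
const newImage: Record<string, AttributeValue> = {
  id: { S: 'user-123' },
  username: { S: 'jane.doe' },
  registeredAt: { S: '2023-01-15T10:00:00.000Z' },
};
console.log(decodeItem(newImage));
```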

So, the question I often think about is… using DynamoDB Streams, can we simplify our EventBridge producers by raising events directly from changes in DynamoDB?

What if we used these change data capture events to process and raise business events using Amazon EventBridge? We could raise events based on the behaviour of our system instead of raising them ourselves….

Let’s dive into a pattern that helps us understand this….

Using DynamoDB Streams to raise events with EventBridge

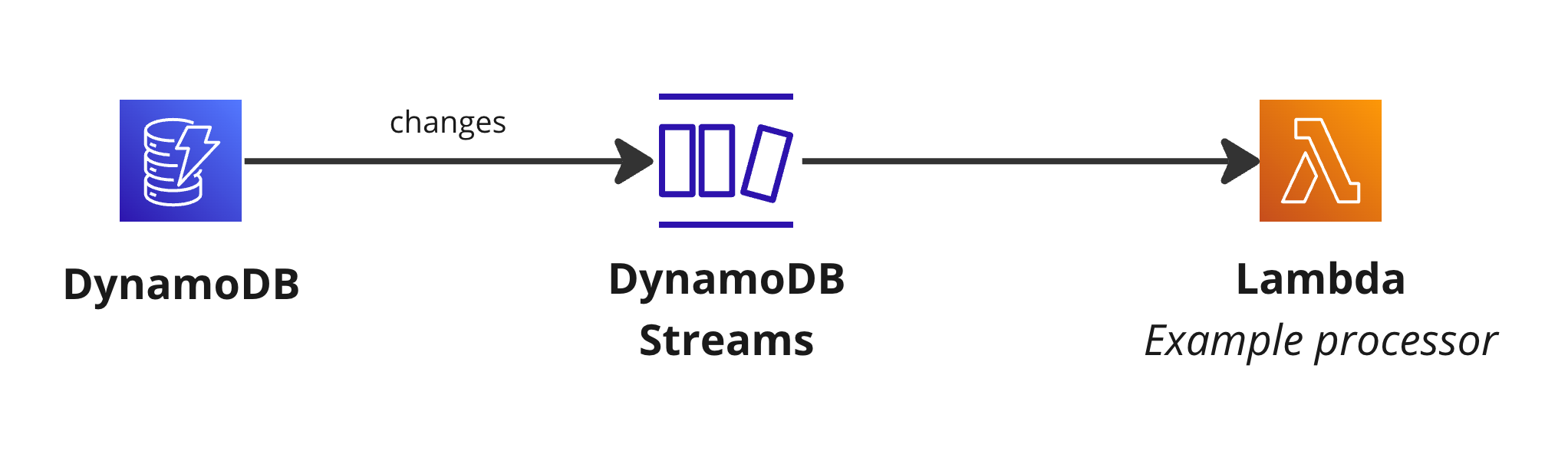

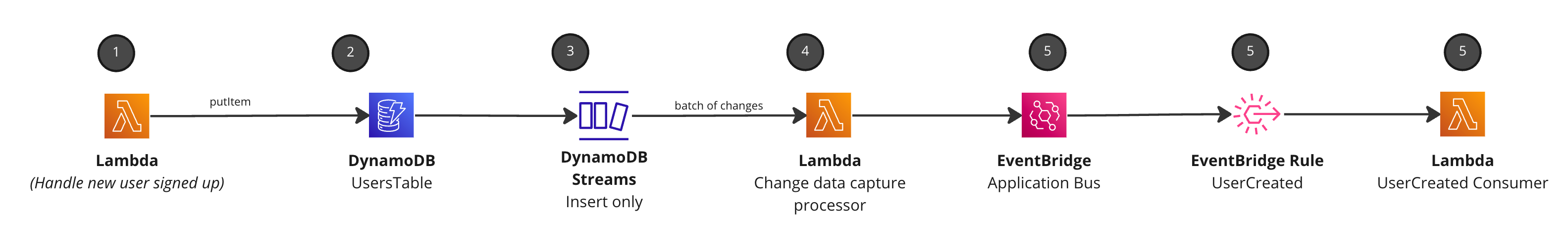

Example pattern using DynamoDB Streams with EventBridge to raise business events

- Lambda function is triggered and inserts data into DynamoDB (a new user in this example). In reality, this Lambda would be triggered by another event, e.g. an API Gateway request. The Lambda itself could raise the event directly, but we want to avoid the coupling and synchronous behaviour mentioned earlier in this blog post.

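import { DynamoDBClient, PutItemCommand } from '@aws-sdk/client-dynamodb';
import { marshall } from '@aws-sdk/util-dynamodb';
import { faker } from '@faker-js/faker';
import { v4 } from 'uuid';
const client = new DynamoDBClient({});
// Insert dummy data into DDB
export async function handler(event: any) {
  await client.send(
    new PutItemCommand({
      TableName: process.env.TABLE_NAME,
      Item: marshall({
        id: v4(),
        username: faker.internet.userName(),
        email: faker.internet.email(),
        avatar: faker.image.avatar(),
        birthdate: faker.date.birthdate().toISOString(),
        registeredAt: faker.date.past().toISOString(),
      }),
    })
  );
}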

- DynamoDB table stores the user information, with a stream set up to capture the NEW_IMAGE of each changed item.

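import * as cdk from 'aws-cdk-lib';
import { AttributeType, BillingMode, StreamViewType, Table } from 'aws-cdk-lib/aws-dynamodb';
// example user table (defined inside a CDK Stack constructor, hence `this`)
const userTable = new Table(this, 'UsersTable', {
  billingMode: BillingMode.PAY_PER_REQUEST,
  removalPolicy: cdk.RemovalPolicy.DESTROY,
  partitionKey: { name: 'id', type: AttributeType.STRING },
  // Set up the stream to emit the new version of each changed item
  stream: StreamViewType.NEW_IMAGE,
})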

- DynamoDB event source set up against our processing Lambda function (which raises EventBridge events). The stream invokes our Lambda function whenever items in the DynamoDB table change, which in this example means new users being added.

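import { StartingPosition } from 'aws-cdk-lib/aws-lambda';
import { DynamoEventSource } from 'aws-cdk-lib/aws-lambda-event-sources';
// stream change events to the lambda function
lambdaFunction.addEventSource(
  new DynamoEventSource(userTable, {
    startingPosition: StartingPosition.LATEST,
    batchSize: 1,
  })
)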

- Lambda connects to the stream to process the change events and transforms them into domain events (in this example, UserCreated). This step converts the AWS events into events our domain/business can understand. For example, our business might want to know when users are created so it can send them emails or capture analytics downstream.

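import { EventBridgeClient, PutEventsCommand } from '@aws-sdk/client-eventbridge';
import { unmarshall } from '@aws-sdk/util-dynamodb';
import type { DynamoDBStreamEvent } from 'aws-lambda';
const client = new EventBridgeClient({});
export async function handler(events: DynamoDBStreamEvent) {
  // transform events into "business/domain events"
  const mappedEvents = events.Records
    // only new items are UserCreated events; MODIFY and REMOVE records are skipped
    .filter((record) => record.eventName === 'INSERT' && record.dynamodb?.NewImage)
    .map((record) => {
      return {
        // the aws-lambda and SDK AttributeValue types differ, hence the cast
        Detail: JSON.stringify(unmarshall(record.dynamodb?.NewImage as any)),
        DetailType: 'UserCreated',
        Source: 'myapp.users',
        EventBusName: process.env.EVENT_BUS_NAME,
        Resources: [record.eventSourceARN || ''],
      };
    });
  // PutEvents requires at least one entry
  if (mappedEvents.length === 0) return;
  const result = await client.send(
    new PutEventsCommand({
      Entries: mappedEvents
    })
  );
  // PutEvents does not throw on partial failure; throw so Lambda retries the batch
  if (result.FailedEntryCount) {
    throw new Error(`Failed to publish ${result.FailedEntryCount} event(s)`);
  }
}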

- Events are sent to our custom business event bus, where rules are set up for downstream consumers. In this example, a basic Lambda function listens for the new UserCreated event.
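To see how a rule picks up the `UserCreated` event, here is a simplified model of event pattern matching: every field named in the pattern must be present on the event, and its value must equal one of the listed values. This is a sketch, not EventBridge's implementation; real patterns also support prefix, numeric, `exists` and other content filters, as well as matching against array values, none of which are handled here. The `source` and `detail-type` values come from the processing Lambda above, and `matches` is a hypothetical helper.

```typescript
// Simplified model of an EventBridge event pattern: exact-value matches only,
// with nested objects for fields such as "detail".
type Pattern = { [field: string]: Array<string | number | boolean> | Pattern };

function matches(pattern: Pattern, event: Record<string, unknown>): boolean {
  return Object.entries(pattern).every(([field, expected]) => {
    const actual = event[field];
    if (Array.isArray(expected)) {
      // The event value must equal one of the allowed values
      return expected.some((value) => value === actual);
    }
    // Nested pattern, e.g. { detail: { username: ['jane.doe'] } }
    return typeof actual === 'object' && actual !== null &&
      matches(expected, actual as Record<string, unknown>);
  });
}

// A pattern a UserCreated consumer might use
const userCreatedPattern: Pattern = {
  source: ['myapp.users'],
  'detail-type': ['UserCreated'],
};

// The event as delivered by EventBridge: DetailType becomes "detail-type" and
// the Detail string is delivered as a parsed "detail" object.
const userCreatedEvent = {
  source: 'myapp.users',
  'detail-type': 'UserCreated',
  detail: { id: 'user-123', username: 'jane.doe' },
};
console.log(matches(userCreatedPattern, userCreatedEvent)); // true
```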

That’s it.

We have set up a pattern that listens for new items being added to the DynamoDB table and raises business events from them. The interesting thing here is that we are listening to the behaviour of our architecture and raising events directly from it.
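If you raise `batchSize` on the event source (it is 1 in this example), two details of `PutEvents` start to matter: it accepts at most 10 entries per request, and a partially failed request does not throw. Instead, the response reports a `FailedEntryCount` and per-entry `ErrorCode` values, in the same order as the request entries. The sketch below shows two helpers you might add for that; `EventEntry` and `ResultEntry` are simplified local types standing in for the SDK's, and `chunk` and `failedEntries` are hypothetical names.

```typescript
// Simplified local copies of the PutEvents request and result entry shapes.
interface EventEntry {
  Source: string;
  DetailType: string;
  Detail: string;
  EventBusName?: string;
}

interface ResultEntry {
  EventId?: string;
  ErrorCode?: string;
  ErrorMessage?: string;
}

const MAX_ENTRIES_PER_PUT_EVENTS = 10;

// Split entries into batches no larger than `size`
function chunk<T>(items: T[], size: number): T[][] {
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) batches.push(items.slice(i, i + size));
  return batches;
}

// Result entries come back in request order, so the index pairs each failure
// with the entry that caused it.
function failedEntries(sent: EventEntry[], results: ResultEntry[]): EventEntry[] {
  return sent.filter((_, i) => results[i]?.ErrorCode !== undefined);
}

// 23 UserCreated events, e.g. from a larger stream batch
const entries: EventEntry[] = Array.from({ length: 23 }, (_, n) => ({
  Source: 'myapp.users',
  DetailType: 'UserCreated',
  Detail: JSON.stringify({ id: `user-${n}` }),
}));
console.log(chunk(entries, MAX_ENTRIES_PER_PUT_EVENTS).map((b) => b.length)); // [ 10, 10, 3 ]
```

In a handler, you would send each batch with `PutEventsCommand` and throw if `failedEntries` returns anything, so that Lambda retries the stream records instead of silently dropping events.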

This is a basic example, but you can combine DynamoDB change events and EventBridge to achieve many use cases.
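For example, the processing Lambda could choose a detail type per change type instead of hard-coding `UserCreated`. The `INSERT`, `MODIFY` and `REMOVE` values are the event names DynamoDB Streams uses. The sketch below assumes the records have already been unmarshalled; `UserUpdated` and `UserDeleted` are hypothetical event names, and publishing deletes with the removed item's data assumes the stream is configured with `OLD_IMAGE` or `NEW_AND_OLD_IMAGES` rather than `NEW_IMAGE`.

```typescript
// Sketch: map each kind of change to its own domain event.
// "UserUpdated" and "UserDeleted" are hypothetical event names.
type ChangeType = 'INSERT' | 'MODIFY' | 'REMOVE';

interface ChangeRecord {
  eventName: ChangeType;
  newItem?: Record<string, unknown>; // already unmarshalled NewImage
  oldItem?: Record<string, unknown>; // already unmarshalled OldImage
}

interface DomainEvent {
  Source: string;
  DetailType: string;
  Detail: string;
}

const DETAIL_TYPES: Record<ChangeType, string> = {
  INSERT: 'UserCreated',
  MODIFY: 'UserUpdated',
  REMOVE: 'UserDeleted',
};

function toDomainEvent(record: ChangeRecord): DomainEvent {
  // A REMOVE record has no new image, so use the old image (needs OLD_IMAGE or
  // NEW_AND_OLD_IMAGES on the stream)
  const item = record.eventName === 'REMOVE' ? record.oldItem : record.newItem;
  return {
    Source: 'myapp.users',
    DetailType: DETAIL_TYPES[record.eventName],
    Detail: JSON.stringify(item ?? {}),
  };
}

console.log(toDomainEvent({ eventName: 'REMOVE', oldItem: { id: 'user-123' } }).DetailType); // UserDeleted
```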

Things to consider when using this pattern

- Think about failures. In step 4 (the Lambda function processing the DynamoDB stream), you might want to attach a DLQ (dead-letter queue) to capture any failures in processing the events from the stream.

- All data in DynamoDB Streams is subject to a 24-hour lifetime. You can retrieve and analyze the last 24 hours of activity for any given table. Data that is older than 24 hours is susceptible to trimming (removal) at any moment.

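- DynamoDB charges for reading data from DynamoDB Streams in read request units, although GetRecords calls made by AWS Lambda as part of a DynamoDB trigger, as in this pattern, are free.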
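On the failure point, DynamoDB stream event sources can also report partial batch failures, assuming `ReportBatchItemFailures` is enabled on the event source mapping (in CDK, the `reportBatchItemFailures` option on `DynamoEventSource`). The handler returns the sequence number of the first record it could not process, and Lambda retries from that record rather than from the start of the batch. Note that for stream sources, a failure destination such as an SQS DLQ receives details about the failed batch (such as shard and sequence numbers) rather than the records themselves, so the records have to be read back from the stream within the 24-hour window. The sketch below uses a simplified local record type and a hypothetical `publish` callback standing in for the `PutEvents` call.

```typescript
// Sketch of a partial batch response for a DynamoDB stream event source.
// StreamRecordLite is a simplified local stand-in for the real record type.
interface StreamRecordLite {
  eventName: 'INSERT' | 'MODIFY' | 'REMOVE';
  dynamodb: { SequenceNumber: string; NewImage?: Record<string, unknown> };
}

interface BatchResponse {
  batchItemFailures: Array<{ itemIdentifier: string }>;
}

async function processBatch(
  records: StreamRecordLite[],
  publish: (record: StreamRecordLite) => Promise<void>, // hypothetical, e.g. wraps PutEvents
): Promise<BatchResponse> {
  for (const record of records) {
    try {
      await publish(record);
    } catch {
      // Stop at the first failure to keep ordering; Lambda resumes from this record
      return { batchItemFailures: [{ itemIdentifier: record.dynamodb.SequenceNumber }] };
    }
  }
  return { batchItemFailures: [] };
}

// Three records where publishing the second one fails
const records: StreamRecordLite[] = ['100', '200', '300'].map(
  (seq): StreamRecordLite => ({ eventName: 'INSERT', dynamodb: { SequenceNumber: seq } }),
);
processBatch(records, async (r) => {
  if (r.dynamodb.SequenceNumber === '200') throw new Error('PutEvents failed');
}).then((res) => console.log(res.batchItemFailures)); // [ { itemIdentifier: '200' } ]
```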

Summary

When using Amazon EventBridge, we have many options to raise events for downstream consumers, and for many the default option is to lean towards using the SDK to raise events directly in our code. Although this is a great pattern to follow, it’s worth considering the other patterns available for our event-driven architectures.

Every time we add an SDK call to raise events into EventBridge, we create a coupling between our service and EventBridge, and if you raise events after a transaction (insert, update or delete), you may run into data inconsistencies or bugs when the transaction completes but the event fails to send.

The outbox pattern was designed to help build more resilient messaging for occasions when you want to store or update information in a database and then send a message or event straight afterwards, as seen at the start of this blog post.
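To show the idea in miniature, here is an in-memory sketch of the classic outbox pattern: the user and an outbox entry are written together, and a separate relay publishes pending entries and marks them as sent. `Store` and `relay` are hypothetical names, and the synchronous `createUser` method stands in for a real database transaction (with DynamoDB, that could be a `TransactWriteItems` call writing both items). Because an entry is only marked as sent after it is published, a crash in between means it is published again, so consumers should tolerate duplicates. In the pattern from this post, the DynamoDB stream plays a role similar to the outbox, since every successful write to the table appears in it.

```typescript
// In-memory sketch of the outbox pattern; all names are hypothetical.
interface OutboxEntry {
  id: number;
  detailType: string;
  detail: string;
  sent: boolean;
}

class Store {
  users = new Map<string, { id: string; username: string }>();
  outbox: OutboxEntry[] = [];
  nextId = 1;

  // Stands in for one transaction: both writes happen synchronously, together
  createUser(id: string, username: string): void {
    const user = { id, username };
    this.users.set(id, user);
    this.outbox.push({ id: this.nextId++, detailType: 'UserCreated', detail: JSON.stringify(user), sent: false });
  }
}

// Publish pending entries in order, marking each one only after it is published
async function relay(store: Store, publish: (e: OutboxEntry) => Promise<void>): Promise<number> {
  let published = 0;
  for (const entry of store.outbox.filter((e) => !e.sent)) {
    try {
      await publish(entry);
      entry.sent = true;
      published++;
    } catch {
      break; // leave this entry pending and retry on the next run
    }
  }
  return published;
}

const store = new Store();
store.createUser('user-123', 'jane.doe');
let busUp = false; // the first relay run happens while the bus is down
const publish = async (e: OutboxEntry): Promise<void> => {
  if (!busUp) throw new Error('event bus unavailable');
  console.log(`published ${e.detailType}`);
};
relay(store, publish)
  .then((first) => {
    console.log(`first run published ${first}`);
    busUp = true;
    return relay(store, publish);
  })
  .then((second) => console.log(`second run published ${second}`));
```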

DynamoDB Streams give us more integration patterns to explore when building event-driven applications, and I recommend exploring them and thinking about how you can leverage them in your event-driven architectures.

If you are interested in using DynamoDB Streams with EventBridge, you can read the code here and explore the pattern more on serverlessland.com.

I would love to hear your thoughts on the pattern or any ideas you have for similar patterns; you can reach me on Twitter.

If you are interested in EventBridge patterns, I have more coming out soon and will be covering patterns like:

- Enrichment pattern with Amazon EventBridge (Out now)

- Claim check pattern with EventBridge (Out now)

- Enrichment with Step Functions

- Enrichment with multiple event buses

- And more….

Extra resources

- Outbox Pattern (website)

- Outbox pattern with DynamoDB and EventBridge: ServerlessLand (code/website)

- Code for pattern in blog post (code)

- Serverless EventBridge patterns (80+)

- Talk I gave on best practices for designing your events in event-driven applications (video)

- Over 40 resources on EventBridge (GitHub)

Until next time.